Continuing my research within the Voice Assistant space. I was asked to consult on the Google Assistant platform for 2018’s “Make Google Do It” campaign by Google. To highlight firstly how the platform works and secondly what is possible to do with first party functionality.

Taking a deep dive into the Google Assistant platform after working on Amazon Alexa’s was really interesting. As I could now firstly compare the two side by side and see if either platform had room for improvement and secondly explain to copywriters who was tasked with creating scenarios around the platform in various languages, what was possible now in April 2018. The focus was to work on the Google Assistant DOOH campaign as an awareness piece to get the UK, French, German & Italian population to use google to achieve common tasks with the assistant mainly using their Android or iOS device. Key areas I was assigned to explore were the following. Each area I was asked to explore how it works in English and French plus provide information for Google Assistant in Italian & German teams.

After spending some time exploring the functionality of what Google Assistant can I started to see how else I could combine Voice commands with the Phones Camera. Google Lens was one of Google’s biggest announcements in 2017, but it was a primarily a Google Pixel-exclusive feature at launch. At Google I/O 2018, Google announced that Google Lens was coming to a lot more phones, although Google Lens isn’t an app but as a service bundled with other Google apps as of July 2018. These include. Google Translate, Android Camera App, and the Google Assitant.

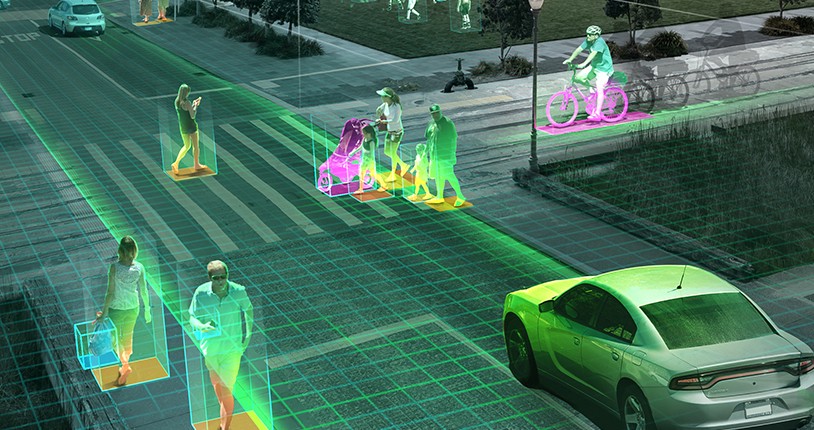

Google Lens is a machine learning powered technology that uses your smartphone camera and Convolutional Neural Networks for Visual Recognition (CNN) to not only detect an object but understand what it detects and offer actions based on what it sees. But my next question was can a developer make their own Apps with Lens? The answer is yes. The Google Cloud Vision and Video Intelligence APIs give you access to a pre-trained machine learning model with a single REST API request. But what do those pre-trained models look like behind the scenes? In this video we’ll uncover the magic of computer vision models by breaking down how Convolutional Neural Nets work under the hood, and we’ll end with a live demo of the Vision API.

Learn more here:

Cloud Vision API → https://goo.gl/1OGqoC

Cloud Video Intelligence API → https://goo.gl/p2X9xn

What is Google Lens?

Google Lens is a super-powered version of Google Googles, and it’s quite similar to Samsung’s Bixby Vision. It enables you to do things such as point your phone at something, such as a specific flower, and then ask Google Assistant what the object you’re pointing at is. You’ll not only be told the answer, but you’ll get suggestions based on the object, like nearby florists, in the case of a flower.

Other examples of what Google Lens can do include being able to take a picture of the SSID sticker on the back of a Wi-Fi router, after which your phone will automatically connect to the Wi-Fi network without you needing to do anything else. Yep, no more crawling under the cupboard in order to read out the password whilst typing it in your phone. Now, with Google Lens, you can literally point and shoot. There are others out there such as Microsoft’s Azure Vision API and Intel’s OpenVINO plus a few others.

Google Lens will recognise restaurants, clubs, cafes, and bars, too, presenting you with a pop-up window showing reviews, address details and opening times. It’s the ability to recognise everyday objects that’s impressive. It will recognise a hand and suggest the thumbs up emoji, which is a bit of fun but point it at a drink, and it will try and figure out what it is.

We tried this functionality with a glass of white wine. It didn’t suggest a white wine to us, but it did suggest a whole range of other alcoholic drinks, letting you then tap through to see what they are, how to make them, and so on. It’s fast and very clever – even if it failed to see that it was just wine. What we really liked is that it recognises the type of drink and suggests things that are similar.

Brief history behind Google Lens

So after cataloging what Google Lens could do for the End User, such as Translate text within Google Translate and Assistant, Recognised products and offer similar products found with the Assistant, Recognised the different types of animals and plants when the camera app was pointed to it plus a many other features I wanted to learn about firstly How ‘Lens’ work within the context of Google and secondly what was the underlying technology that made it work, curiously. Could third-party developers use the technology behind it or was it closed platform? Fourthly I wanted to gain a greater understanding of how we got to this point in the history of Computer Vision. As you can imagine it was a slow series of incremental steps from understanding the biology of the human eye and the neural networks of the brain to create simple seniors that understand the world around them in Analogy and then into Digital.

So I started at the beginning, the field of computer vision emerged in the 1950’s. One early breakthrough came in 1957 in the form of the “Perceptron” machine. This “giant machine thickly tangled with wires” was the invention of psychologist and computer vision pioneer Frank Rosenblatt. See Timeline of Computer Vision. Biologists had been looking into how learning emerges from the firing of neuron cells in the brain. Broadly the conclusion was that learning happens when links between neurons get stronger. And the connections get stronger when the neurons connect more often. Rosenblatt’s insight was that the same process could be applied in computers. The Perceptron used a very early “artificial neural network” and was able to sort images into very simple categories like triangle and square. While from today’s perspective Perceptron’s early neural networks are extremely rudimentary, they set an important foundation for subsequent research.

Another key moment was the founding of the Artificial Intelligence Lab at MIT in 1959. One of the co-founders was Marvin Minsky and in 1966 he gave an undergraduate student a specific assignment. “Spend the summer linking a camera to a computer. Then get the computer to describe what it sees.” Needless to say, the task has proved a little more difficult than one grad student can achieve over a single summer! Minsky’s timelines were over-confident. But there was progress, for example, the understanding of where the edges of an object within an image are. The 70s saw the first commercial applications of computer vision technology. Optical Character Recognition (OCR) makes typed, handwritten or printed text intelligible for computers. In 1978, Kurzweil Computer Products released its first product. The aim was to enable blind people to read via OCR computer programs. The 1980s saw the expanded use of the artificial neural networks pioneered by Frank Rosenblatt in the 50s. The new neural networks were more sophisticated in that the work of interpreting an image took place over a number of “layers”.

Things started to heat up in the second half of the 90s. This decade saw the first use of statistical techniques to recognize faces in images; and increasing interaction between computer graphics and computer vision. Progress accelerated further due to the Internet. This was partly thanks to larger annotated datasets of images becoming available online. And in the past decade that progress with computer vision has taken off. Two underlying reasons have driven the breakthroughs: more and better hardware; and much, much more data.

Machine Learning & A.I – Key difference.

After getting some grounding and taking a Video course from Standford University’s School of Engineering I started to understand that connection between Machine Learning (ML), Artifical Intelligence (IA) and Computer Vision. (CV). Now we know that a product designer can design an experience using Google REST API to not just use the camera to pick out numbers and text from the real work but also understand the context of images and products. I wanted to better understand what was the difference between ML and IA?

Artificial Intelligence (AI) and Machine Learning (ML) are two very hot buzzwords right now, and often seem to be used interchangeably. They are not quite the same thing, but the perception that they are can sometimes lead to some confusion. Both terms crop up very frequently when the topic is Big Data, analytics, and the broader waves of technological change which are sweeping through our world.

- Artificial Intelligence is the broader concept of machines being able to carry out tasks in a way that we would consider “smart”. And, Machine Learning is a current application of AI based on the idea that we should really just be able to give machines access to data and let them learn for themselves. Artificial Intelligence – and in particular today ML certainly has a lot to offer. With its promise of automating mundane tasks as well as offering creative insight, industries in every sector from banking to healthcare and manufacturing are reaping the benefits. So, it’s important to bear in mind that AI and ML are something else … they are products which are being sold – consistently, and lucratively.

- Machine Learning has certainly been seized as an opportunity by marketers. After AI has been around for so long, it’s possible that it started to be seen as something that’s in some way “old hat” even before its potential has ever truly been achieved. There have been a few false starts along the road to the “AI revolution”, and the term Machine Learning certainly gives marketers something new, shiny and, importantly, firmly grounded in the here-and-now, to offer.

Understand & Training Neural Networks

What is a Convolutional Neural Network?

From the Latin convolvere, “to convolve” means to roll together. For mathematical purposes, a convolution is the integral measuring how much two functions overlap as one passes over the other. Think of a convolution as a way of mixing two functions by multiplying them. Convolutional neural networks are deep artificial neural networks that are used primarily to classify images (e.g. name what they see), cluster them by similarity (photo search), and perform object recognition within scenes. They are algorithms that can identify faces, individuals, street signs, tumors, platypuses and many other aspects of visual data.

Convolutional networks perform optical character recognition (OCR) to digitize text and make natural-language processing possible on analog and hand-written documents, where the images are symbols to be transcribed. CNNs can also be applied to sound when it is represented visually as a spectrogram. More recently, convolutional networks have been applied directly to text analytics as well as graph data with graph convolutional networks.

The efficacy of convolutional nets (ConvNets or CNNs) in image recognition is one of the main reasons why the world has woken up to the efficacy of deep learning. They are powering major advances in computer vision (CV), which has obvious applications for self-driving cars, robotics, drones, security, medical diagnoses, and treatments for the visually impaired.

Learn more here:

How Google CNNs work → https://goo.gl/W51CGk

How Google RNNs work → https://goo.gl/I7RChj

Define CNNs https://skymind.ai/wiki

How to Train a Convolutional Neural Network

Imagine you are a mountain climber on top of a mountain, and night has fallen. You need to get to your base camp at the bottom of the mountain, but in the darkness with only your dinky flashlight, you can’t see more than a few feet of the ground in front of you. So how do you get down? One strategy is to look in every direction to see which way the ground steeps downward the most, and then step forward in that direction. Repeat this process many times, and you will gradually go farther and farther downhill. You may sometimes get stuck in a small trough or valley, in which case you can follow your momentum for a bit longer to get out of it. Caveats aside, this strategy will eventually get you to the bottom of the mountain.

This scenario may seem disconnected from neural networks, but it turns out to be a good analogy for the way they are trained. So good in fact, that the primary technique for doing so, gradient descent, sounds much like what we just described. Recall that training refers to determining the best set of weights for maximizing a neural network’s accuracy. In the previous chapters, we glossed over this process, preferring to keep it inside of a black box, and look at what already trained networks could do. The bulk of this chapter however is devoted to illustrating the details of how gradient descent works, and we shall see that it resembles the climber analogy we just described.

Neural networks can be used without knowing precisely how training works, just as one can operate a flashlight without knowing how the electronics inside it work. Most modern machine learning libraries have greatly automated the training process. Owing to those things and this topic being more mathematically rigorous, you may be tempted to set it aside and rush to applications of neural networks. But the intrepid reader knows this to be a mistake, because understanding the process gives valuable insights into how neural nets can be applied and reconfigured. Moreover, the ability to train large neural networks eluded us for many years and has only recently become feasible, making it one of the great success stories in the history of AI, as well as one of the most active and interesting research areas.

Learn more here:

How to Train your CNN? → https://ml4a.github.io/